Lightsaber: Watson Orchestrate 2.0

Watson Orchestrate is an enterprise tool offered through IBM Business Automation. It is a “digital worker” (like Siri or any other digital assistant, but built for businesses) that works alongside its human colleagues. Powered by AI, it can connect apps, create automations, and take menial tasks off people’s plates.

“Lightsaber” is the new iteration of Watson Orchestrate. The previous iteration can be seen here.

My role: UX designer of the admin experience

My team: Fabienne Schoemann, team lead; Myriam Battelli, visuals

Skills honed: Dashboard creation, data visualization, user testing, enterprise software design, information architecture, admin consoles

Status: Handed off to development, product is live

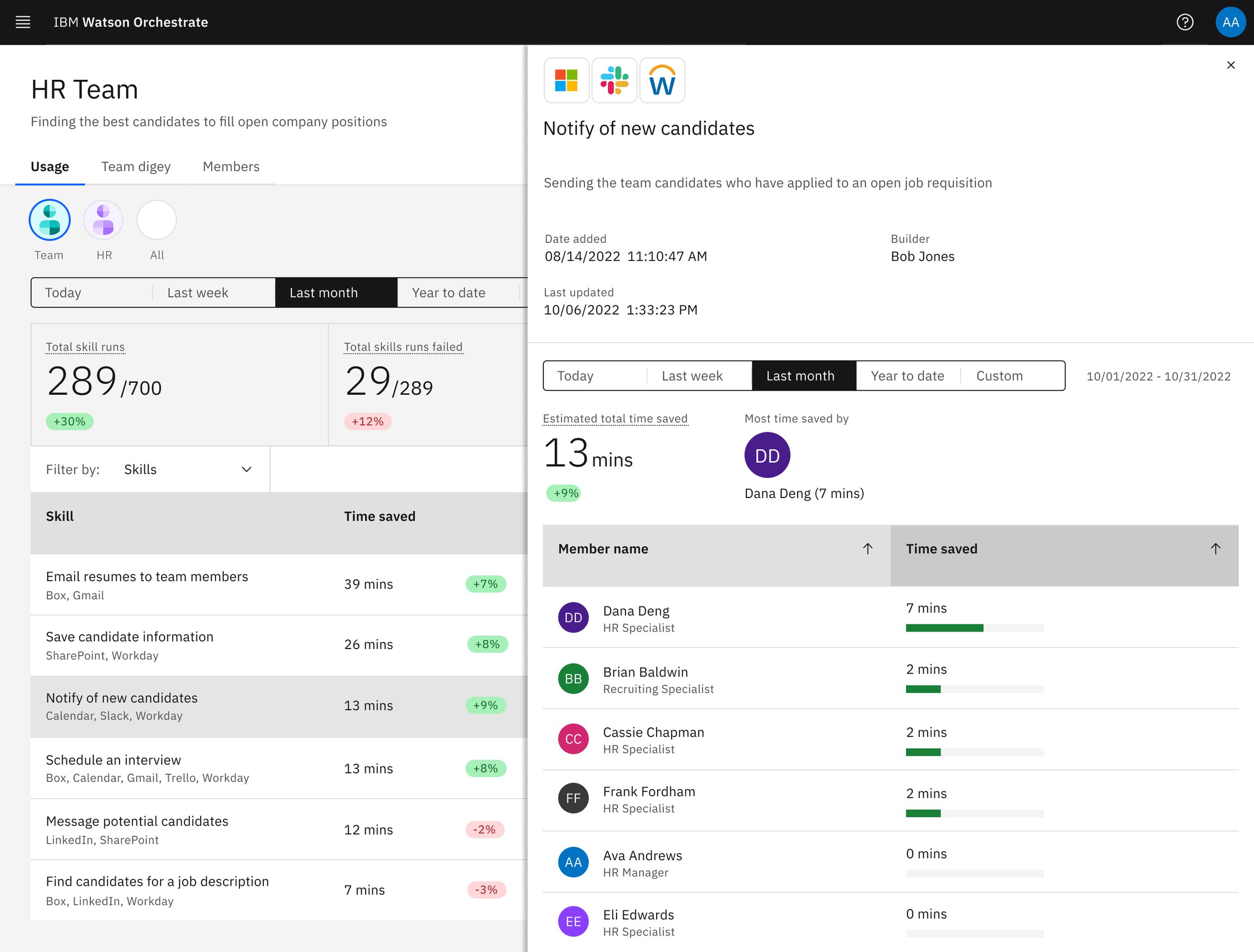

The three tabs of the Watson Orchestrate admin experience. Visual design by Myriam Battelli.

Project Overview

the next-gen AI experience

Watson Orchestrate, old vs. new. Visual designs by Sapna Patel and Kylee Barnard.

Like the previous iteration of Watson Orchestrate, this digital worker (also called a digey) uses a chat interface to match user requests to relevant skills. Skills include actions such as drafting proposals and sending emails, as well as integrations with apps like SAP, Workday, and more. These skills are what let Watson Orchestrate fulfill a request.

My team was tasked with creating the admin experience.

How Watson Orchestrate works for the end user: the user types a request in the chat and is shown relevant skills. Home page design by Kylee Barnard, Sarah Burwood, Eva Dage, and Vikki Paterson.

project goals

Create a digital worker experience that seamlessly integrates with a human team’s existing processes

Create an admin experience that allows the admin to assess their team, find and fix errors quickly, and add and remove team members and skills

project challenges

Syncing with a massive team that had designers in the US, Canada, Italy, UK, Ireland, Germany, and India

Only 6 months to pull off what’s never been done before at IBM

Research

the admin user and her pain points

Meet Ava. Ava manages a team of recruiters and HR specialists. Her goal: for her team to find and sign the best candidates for open roles before the competition snaps them up. This persona was validated through interviews with 11 automation managers and six rounds of user testing.

insights about ava

Managers are low-code users but have a passing understanding of automation

There is no consensus on how companies approach recruiting, candidate sourcing, talent acquisition, and HR: sometimes they are separate departments, sometimes they all fall under HR

The greatest pain points are juggling multiple tools and working with inefficient processes

As manager, Ava is the admin for her team and its team digital worker: she adds and removes team members, as well as the skills that end users interact with.

Iteration & testing

usage dashboard, managing skills, and managing members

For every team, there is a team digital worker with access to that team’s apps and data. As the admin, Ava oversees the team digey.

Ava’s admin experience covers three parts:

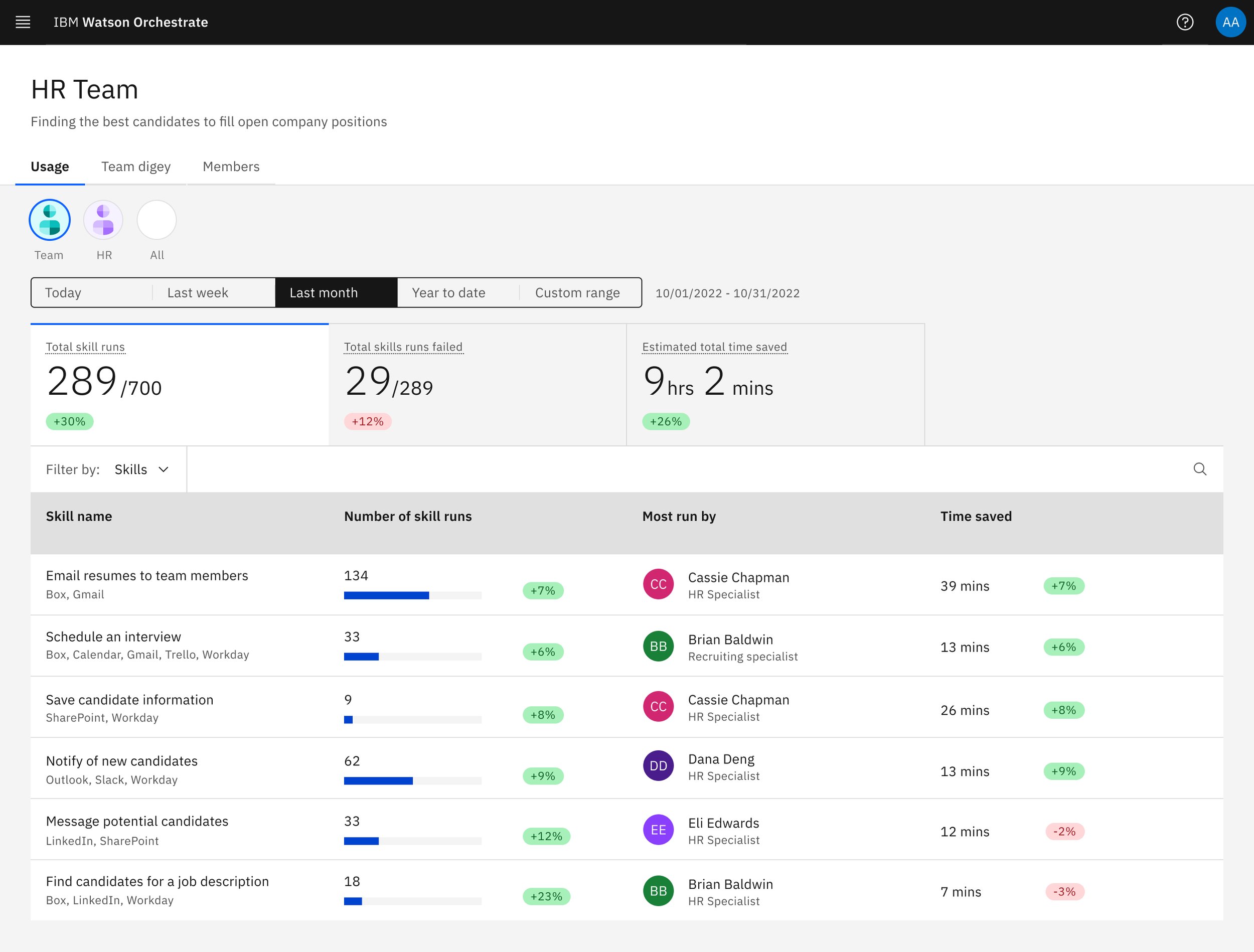

A usage dashboard where Ava can see the skills used and her team members’ usage, as well as find and fix errors

A place to manage the digey’s skills, where she can add skills to the digey from a skill catalog

A place to manage team member access, where she can add people to the digey and remove them

Information architecture showing the navigation of Watson Orchestrate. My team covered everything in green.

the usage dashboard

Early dashboard explorations. The design team favored the middle, with the tabs.

The ideal dashboard experience shows Ava, at a glance:

What skills are being used most often

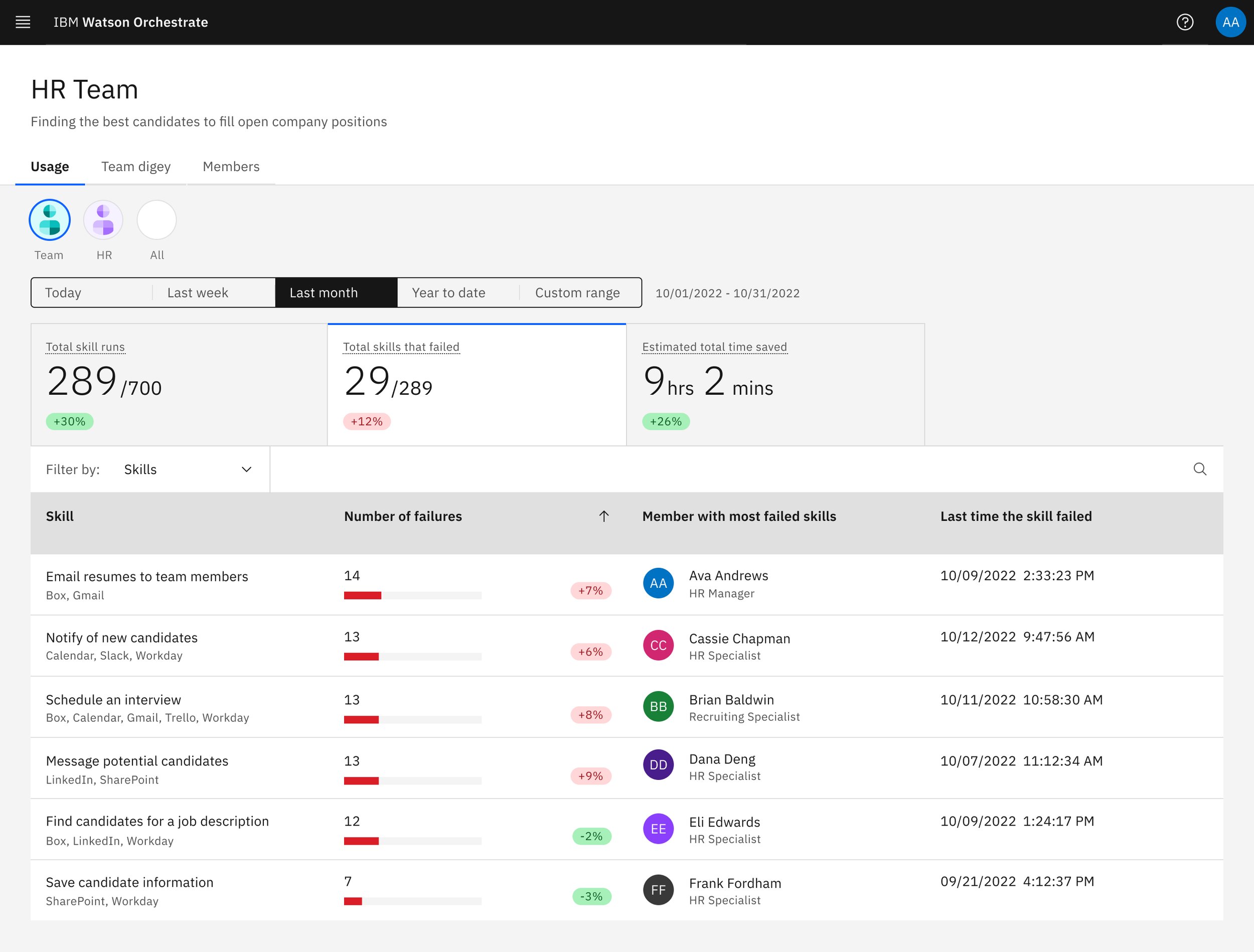

What skills have errors or fail to run

How many times skills are used, and how many more times they can be run before her team reaches its limit

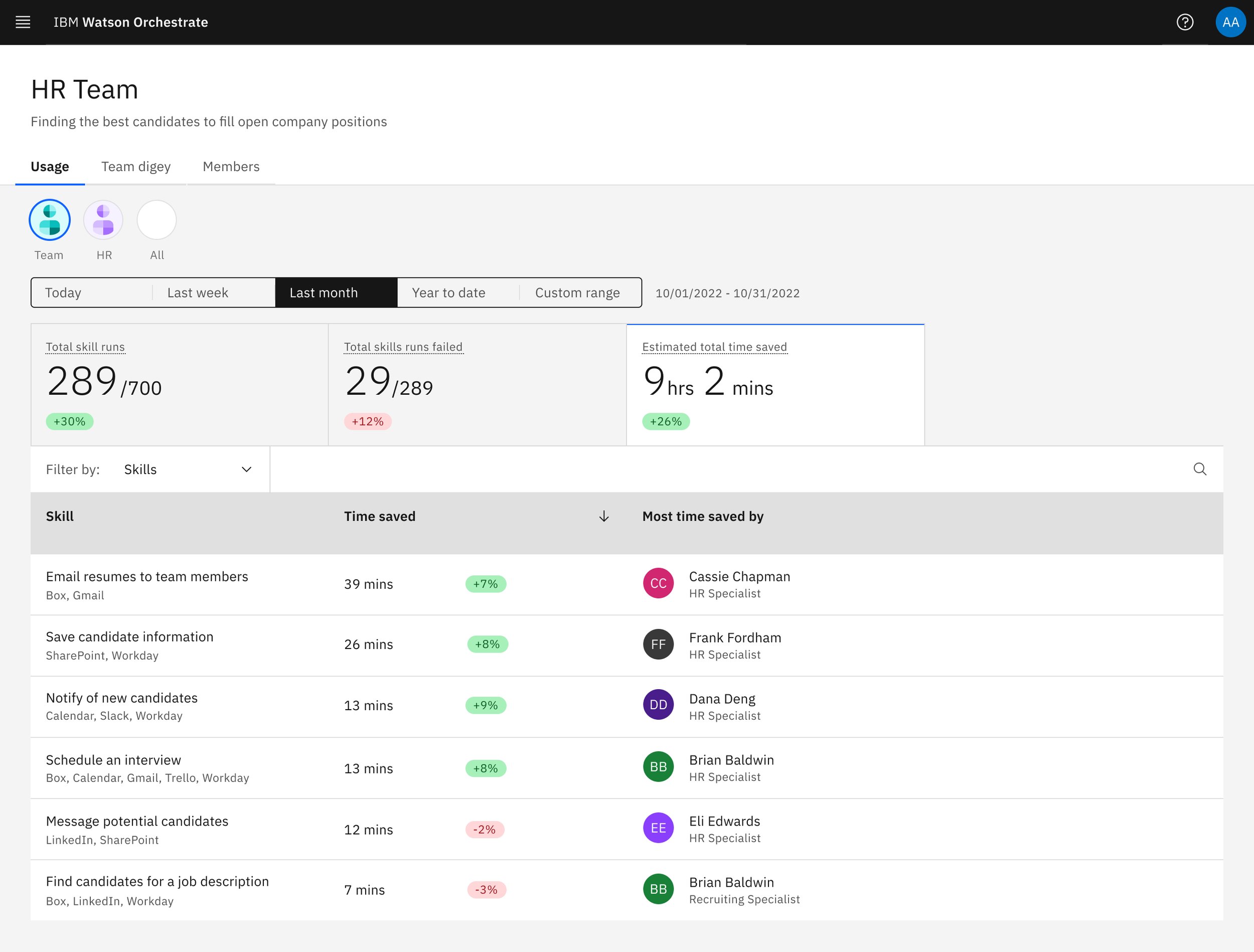

Time saved using the skills and automations

What skills her team is using

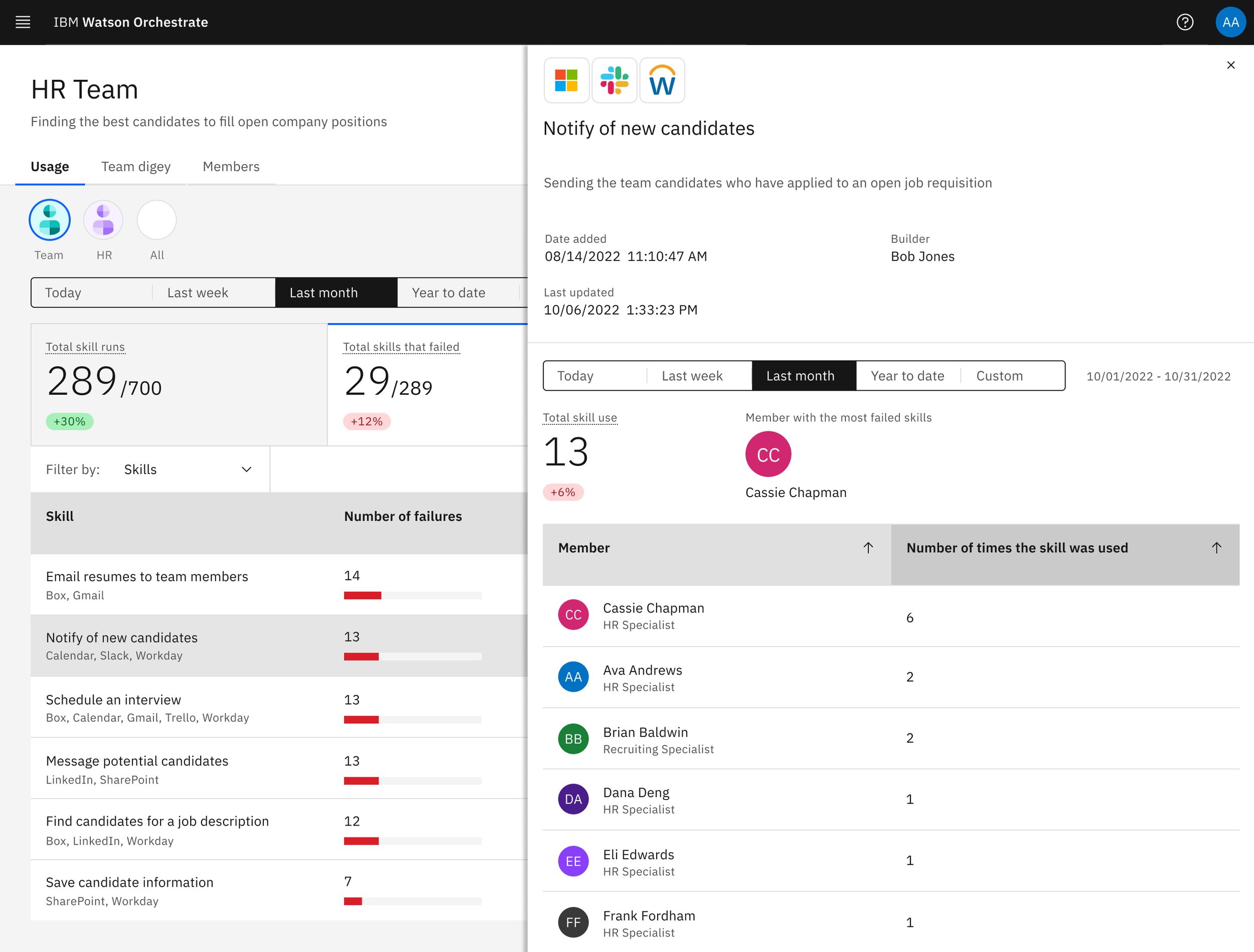

Here, Ava can see all the skills being used by her team digey, including how often they run, when they failed, and the time they saved.

Here, Ava can click into an error to see what went wrong. Unfortunately, identifying the cause of an error was deemed technically infeasible, though it was a nice idea.

managing skills

In the Team digey tab, Ava must be able to manage the skills that the team digey uses. This means adding skills from a skill catalog and connecting apps with login information.

The digey tab, where Ava can see all the skills her team digey currently uses.

The skill catalog, where Ava can choose skills based on apps. Design by Vikki Paterson and Gary Thornton.

In the skill catalog, Ava can see all skills relating to a particular app. She connects to a skill, then provides her login info so the digey can use the skill on her behalf.

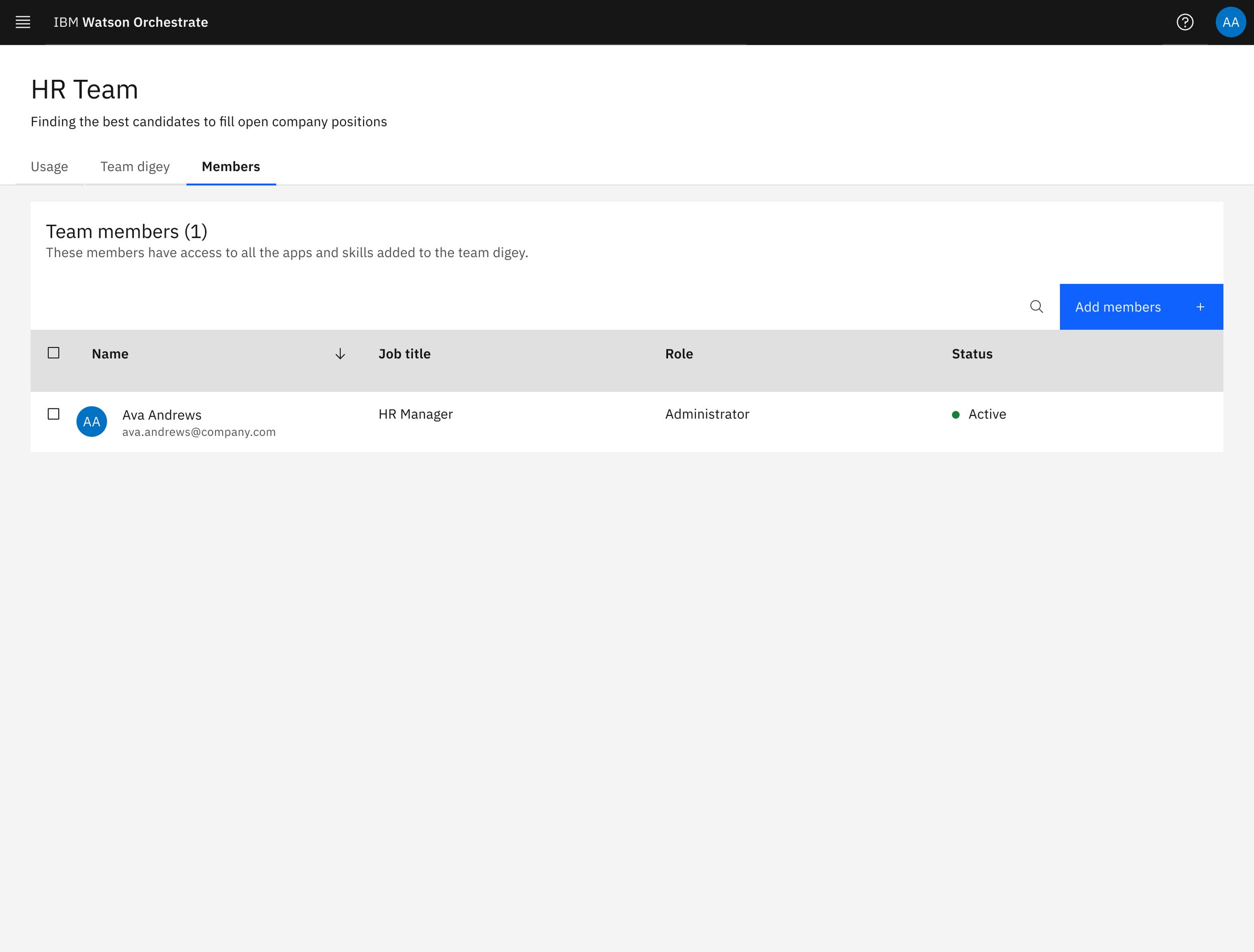

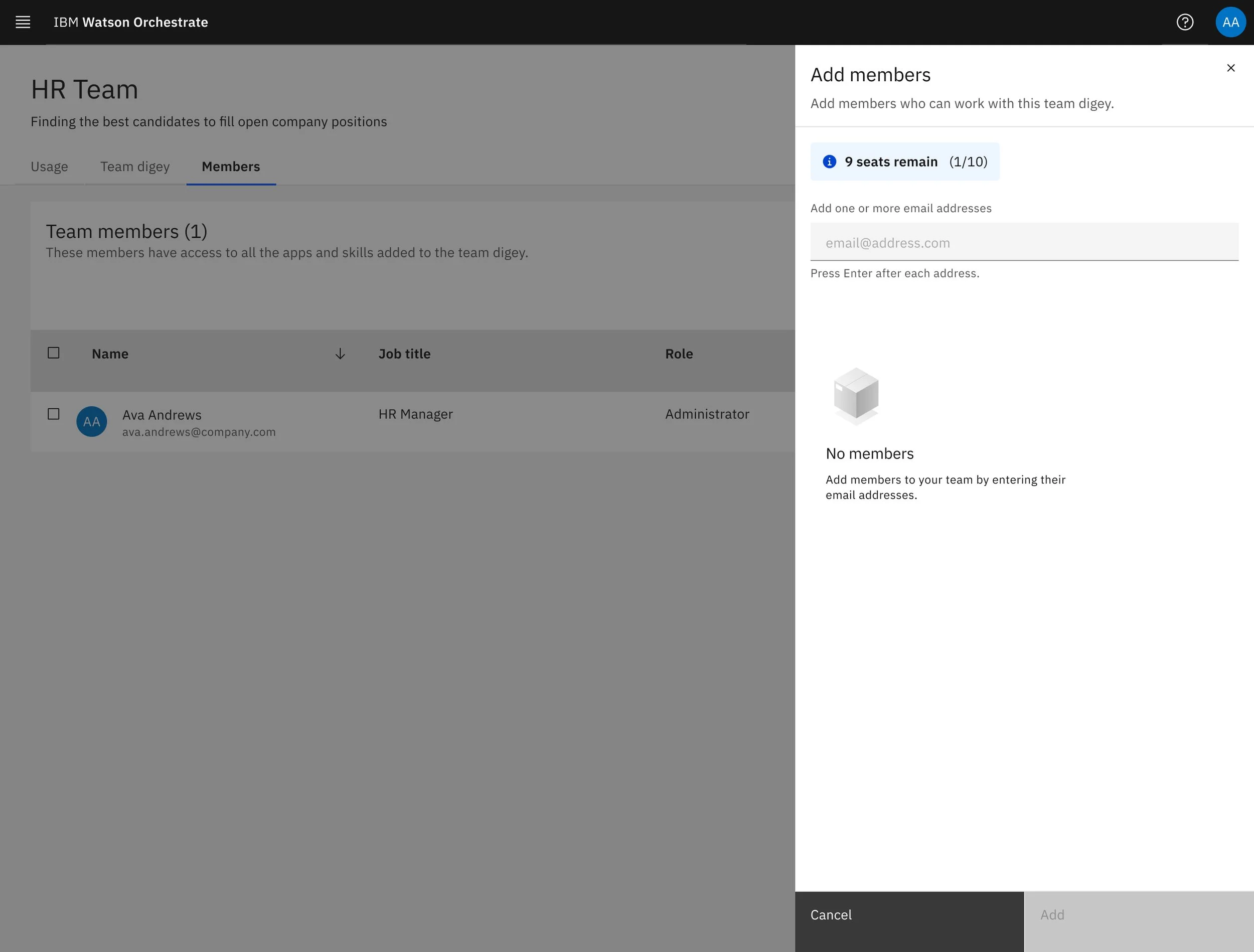

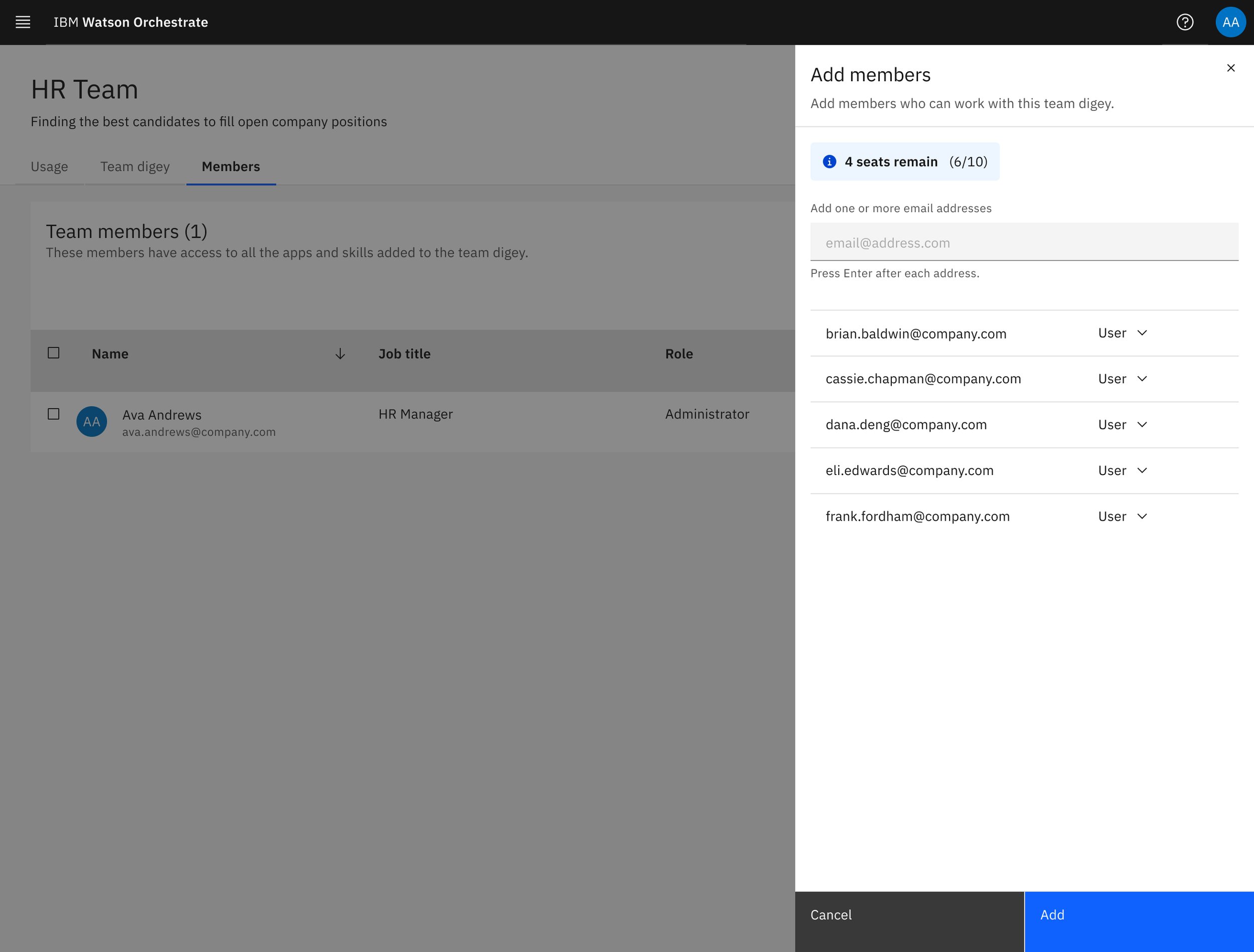

managing team members

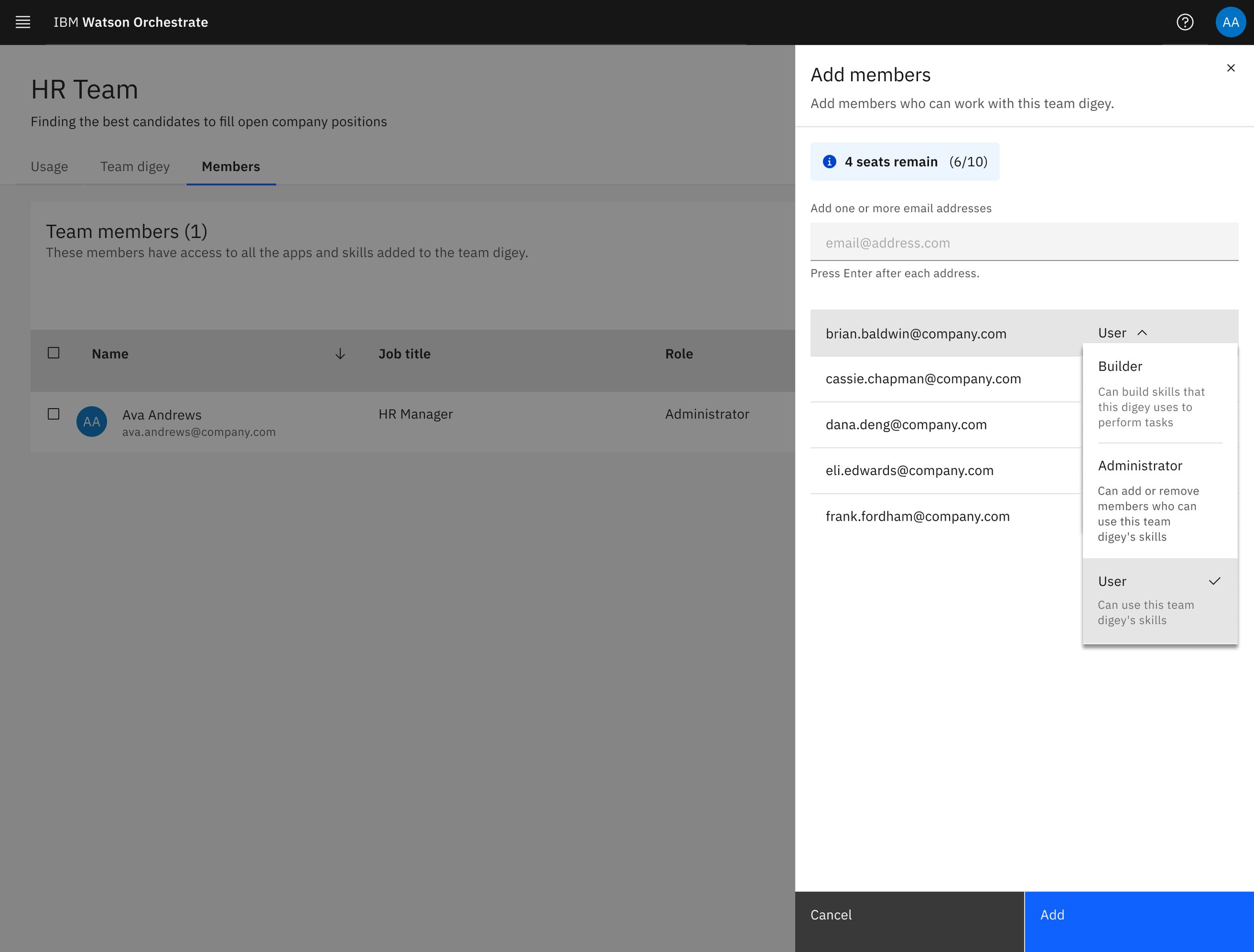

Low-fidelity designs showing how Ava adds team members to the team digey.

On the member page, Ava can add members, remove members, and manage roles.

additional challenge: delivering under time constraints

A couple of months in, it became clear that we would not be able to hit our six-month goal. I worked with product management and engineering to define a minimum viable product (MVP) that met users’ needs without sacrificing the experience.

Final Design

meet your digital teammate

We conducted six rounds of user testing, one every two weeks over twelve weeks, with four Avas (all HR managers). With their feedback, we crafted the final experience.

the usage dashboard

Ava can view the number of times a skill has run or failed, and which team members use it. We removed the storage tab due to technical constraints.

User feedback

“Clear information. I like seeing who’s having difficulties. I’d use this page to see where I need to work on things.”

“Easy to use. So many other tools are hard to see the important info, but this is really good.”

“We have so many dashboards to look at. I like that I can toggle between members and skills.”

managing skills

Ava can see her team digey’s skills, connect apps, and add more skills from the skill catalog.

User feedback

“This is set up the way I want it—I like knowing the connections.”

“Pretty easy and straightforward.”

“I like that you can see the apps, though it could use more information about Member or Team logins.”

managing team members

Ava can add team members, set their roles (User or Admin), and remove them if need be. Added team members have access to the full team digey.

User feedback

“This is super clear to read. It’s clean, and I like that it shows what members are active.”

“Five out of five!”

“Easy to use, and I wish you had a way to export the data.”

Watson Orchestrate in action! Visual design by Myriam Battelli. Skill catalog design by Gary Thornton and Eva Dage.

Watson Orchestrate went on to win a CES Innovation Award and three Indigo Design Awards, and was a finalist in the IxDA “Disrupting” tech category.

A brief review

What I learned

Every project and team teaches me something new about design. Here’s what went well, what went wrong, and what I learned while working on Lightsaber:

Information architecture is king. Creating an IA diagram should probably be one of the first things done on a project, because it tells you where everything is in relation to everything else.

Get your roots right. Nail down all terminology at the beginning. This product struggled at first because the team could not reach a consensus on terminology: whether access roles should be User or Member, whether Watson Orchestrate should be called a digital worker, a digital teammate, or a digital assistant, and so on.

Optimize user testing. Initially we tested every two weeks with the same four people, but that stopped working: the participants became too familiar with the product, and the two-week cadence didn’t give us enough time to explore and correct mistakes between rounds. Next time, we might try a three-week cadence with a wider, rotating cast of users.